Remote Live Music-Making With Jamulus

For questions, contact [email protected].

If you feel moved to give back, consider making a contribution to C4.

Audio Routing

If you are satisfied with your existing Jamulus setup, don't feel compelled to read this section. It's aimed at those who want to extend their setup with more advanced techniques.

We can use some additional software configuration to solve a few problems, which fall into two categories: things each participant can do to make themselves sound better, and things an engineer can do to control the livestream. Both involve chaining other audio software together with Jamulus.

The Concept of Routing

Using Jamulus by itself is relatively straightforward in terms of audio routing. You just select where your microphone input is coming from, and where the output sound should be sent (usually your headphones).

To do more with software, more complicated routing is required. For instance, to compress the microphone input, you might want to run the microphone audio into a DAW (digital audio workstation) to act as a compressor, then feed the DAW into Jamulus. At the other end, you might want to send the Jamulus output to both your headphones and recording software, to capture the performance. This requires a way of routing audio between applications.

You might expect that this would involve some sort of patch panel that showed all your audio inputs and outputs (DAW, Jamulus, microphone, headphones), and let you connect one to another. But it generally works a little differently. Applications expect to choose their audio inputs and outputs. As we saw above, for instance, the Jamulus Settings window has a dropdown where you choose the inputs and outputs. DAW applications have similar settings. Each application expects to control its own destiny in that way, rather than having an output that a routing program would then connect to another application’s input.

What is needed, therefore, is a set of “fake” inputs and outputs to act as places where one application can send its output, and another application can get its input. This is known as a virtual audio driver, because it appears to the system to be a normal audio driver with inputs and outputs, but is really just used to connect things together.

To return to the compression example, let’s say that we want to send the microphone signal to a DAW to be compressed, then to Jamulus, then to the headphones. We set the DAW input to be our audio interface, selecting channel 1 because that’s where the microphone is plugged in. The output of the DAW is set to the virtual audio driver, and we send our compressed microphone signal to channel 1. Jamulus is configured to take its input from the virtual audio driver, channel 1. The Jamulus output is sent to our audio interface, to drive our headphones.
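As a rough illustration of the compression example, here is a toy Python model of a virtual audio driver. It is only a sketch of the idea: the class, the crude `compress` function, and the sample values are all made up for illustration, and no real audio driver or DAW works on plain Python lists like this. The point is simply that the DAW writes to channel 1 of the driver and Jamulus reads the same signal back from channel 1.

```python
class VirtualAudioDriver:
    """Toy loopback driver: what is written to output N is read back from input N."""

    def __init__(self, channels=16):
        # One buffer per channel, numbered from 1 as in audio settings windows.
        self._buffers = {ch: [] for ch in range(1, channels + 1)}

    def write_output(self, channel, samples):
        self._buffers[channel] = list(samples)

    def read_input(self, channel):
        return list(self._buffers[channel])


def compress(samples, threshold=0.5, ratio=4.0):
    """Very crude compressor (hypothetical): shrink whatever exceeds the threshold."""
    out = []
    for s in samples:
        level = abs(s)
        if level > threshold:
            level = threshold + (level - threshold) / ratio
        out.append(level if s >= 0 else -level)
    return out


# The DAW reads the microphone from the audio interface (made-up sample values) ...
microphone = [0.1, 0.9, -0.7, 0.3]

# ... compresses it, and writes the result to channel 1 of the virtual driver.
driver = VirtualAudioDriver()
driver.write_output(1, compress(microphone))

# Jamulus is configured to take its input from the virtual driver, channel 1.
jamulus_input = driver.read_input(1)
print(jamulus_input)
```

Notice that nothing in the driver itself is configured. The connection exists only because the DAW and Jamulus were both pointed at channel 1.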
This is a simple example; we will get much more complicated later. But you can see what the virtual audio driver does. It pretends to be an output device for the DAW, and pretends to be an input device for Jamulus. All it does internally is send output 1 directly to input 1.

The confusing thing about this for me was that there isn’t actually a configuration for the virtual driver itself. There’s no patch panel showing how everything connects. Instead, you have to set up both the DAW and Jamulus in ways that collectively make sense, keeping everything straight in your head. Perhaps there is a higher-level program that can act as a patch panel, but it will be layered on top of all this, and still require configuration of each individual program.

So far I have only used one virtual audio driver, but if we find others, we can add information about them.

BlackHole is an audio routing driver for the Mac. Visit the link for download instructions. BlackHole acts like an audio interface with 16 inputs and 16 outputs, always wired together. It installed easily for me, and worked as I expected, once I figured out how to expect virtual audio drivers to work.

SourceNexus is another product that (I think) does the same thing.

Whatever routing software you use, just think of it as glue connecting together multiple audio applications.

Aggregate Devices

This section is Mac-centric. There may be equivalent software in other environments, and certainly the same problems need to be solved, but I'm not sure of the particulars.

Sometimes more complicated setups are necessary. Let’s say the microphone should go to the DAW for compression, then be sent to Jamulus. When the mix of everyone’s singing comes back from Jamulus, it goes back through the DAW to add reverb to the whole thing, then goes out to your headphones. So the routes needed are:

- Microphone (audio interface) to the DAW, for compression
- DAW to Jamulus, through the virtual audio driver
- Jamulus back to the DAW, through the virtual audio driver, for reverb
- DAW to the headphones (audio interface)

In this situation, Jamulus is still easy to configure. It takes its input from the virtual audio driver, and sends its output back to the virtual audio driver, expecting the DAW to be on the other end in both cases.

But the DAW has a problem. It needs to get input both from the audio interface (for the microphone) and the virtual audio driver (for the output of Jamulus). And it needs to send its output both to the virtual audio driver (to send to Jamulus) and to the audio interface (for the headphones). Unfortunately, most applications expect to be configured with a single input device and a single output device. How do we allow the DAW to interact with multiple input and output devices?

On the Mac, an extra bit of wizardry exists for this case, called an aggregate device. Basically, the aggregate device is a pseudo-device that is actually multiple real devices packaged together. So in the example above, the DAW talks to the aggregate device, which is really both the audio interface and the virtual routing device at the same time.

To create an aggregate device, use the Audio MIDI Setup utility, which controls both MIDI and audio setup in macOS. It is found in the Utilities subfolder of the Applications folder. Run the application and choose Window > Show Audio Devices (it may already be showing when you start the app).

The audio devices window looks like this. On the left-hand side are all the devices (and virtual devices) in your system. In the picture above are:

What we want to do in the example described before is create a new pseudo-device that acts like both Quartet and BlackHole together. Instead of Quartet, with its 12 ins and 8 outs, and BlackHole, with its 16 ins and 16 outs, we will have a single mega-device with 28 ins and 24 outs. Some of those ins and outs will really be the Quartet, and some will be BlackHole. Then, the DAW can be configured to use that device for both input and output. By using the right inputs and outputs, it can communicate with both the Quartet and BlackHole at the same time. Complicated, but workable.

To do this, click the + sign in the lower left of the window, and select Create Aggregate Device. Click the checkboxes on all the devices you want to be part of the aggregate. After I did that, my window looked like this.

There is now a new device in the list on the left. I named it BlackHole/Quartet, and it has the + icon to indicate that it’s an aggregate device. The display on the right shows what each of the 28 inputs and 24 outputs maps to. The black channels on the left are, appropriately, BlackHole. The blue channels on the right are the Quartet. Now all I have to do is remember all those numbers, and then I can configure my DAW correctly.
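If remembering the numbers is a chore, the arithmetic can be sketched in a few lines of Python. This is only an illustration, and it assumes that the aggregate numbers its channels contiguously, with all BlackHole channels first and the Quartet channels after them (which matches the BlackHole/Quartet device described here, but may not match yours). The `locate` helper is hypothetical; check the channel map in Audio MIDI Setup before relying on it.

```python
# Sub-devices in the assumed aggregate order, with channel counts from the text.
AGGREGATE = [
    ("BlackHole", {"in": 16, "out": 16}),
    ("Quartet", {"in": 12, "out": 8}),
]


def total(direction):
    """Total number of aggregate channels for 'in' or 'out'."""
    return sum(counts[direction] for _, counts in AGGREGATE)


def locate(channel, direction):
    """Map a 1-based aggregate channel to (device name, 1-based device channel)."""
    if channel < 1:
        raise ValueError("channel numbers start at 1")
    offset = 0
    for name, counts in AGGREGATE:
        n = counts[direction]
        if channel <= offset + n:
            return name, channel - offset
        offset += n
    raise ValueError(f"aggregate has only {offset} {direction} channels")


print(total("in"), total("out"))  # 28 24
print(locate(1, "in"))            # ('BlackHole', 1)
print(locate(17, "in"))           # ('Quartet', 1)
print(locate(18, "out"))          # ('Quartet', 2)
```

Under that assumption, the microphone on Quartet input 1 shows up as aggregate input 17, and the headphones on Quartet outputs 1 and 2 are aggregate outputs 17 and 18.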

I am not totally sure about the clock source and drift correction settings. They have to do with keeping things in sync, so that all the devices stay locked at the same sample rate. You select one device as the master arbiter of the clock, and then click “Drift Correction” for all other devices in your aggregate. In the case above, BlackHole is the clock source, and the Quartet has drift correction enabled. I’m not sure if that’s the right way around.

After you create a pseudo-device like this, you may need to restart your audio applications so that they recognize the new kid on the block. That was true of Jamulus, anyway.

A note about the multi-output device, in case you are curious. It’s a different kind of pseudo-device that you also create using the + menu at the bottom left. Instead of the channels being aggregated, they all lie on top of each other. In this case, I created an output device. When you send to it, it mirrors the output to my built-in speakers, to the Quartet, and to BlackHole all at the same time. BlackHole recommends doing this if, for instance, you want to send your output to your headphones and record it at the same time.

And a note about Audio MIDI Setup: for me, anyway, it crashes a lot. I learned to stop blaming myself for it.

Choosing a Digital Audio Workstation

There are many Digital Audio Workstation (DAW) applications to choose from these days, including many free ones that do everything we need for this particular use case. I decided, based on 15 minutes’ research, to use Waveform Free, but you can choose a different one if you want.

However, there is one you should not use, sadly, and that is GarageBand, my usual go-to DAW, which I really like for a lot of reasons, including the fact that it’s free and bundled on every Mac. But it has two fatal flaws. The first is that it always uses a sample rate of 44,100 Hz. This is a fine sample rate, but Jamulus currently always uses a sample rate of 48,000 Hz, also a fine sample rate. If you try to use them together, they will compete to see who can set the audio interface’s sample rate to their preferred value. The other problem is that GarageBand always sends its output to the first two channels of the configured audio driver. Normally, this is not a problem for me. But it prevents any of the complicated routing described earlier. So as much as I like it, I suggest not using it.

Waveform Free is a free, reduced-capability version of the not-free Waveform Pro, from Tracktion. I chose it at semi-random, but so far it has done everything I need it to do, and I have been happy with it.

I won't bother trying to list all the other options; there are many.

Routing the DAW to and from Jamulus

To use a DAW, we need to do a couple of things. First, set the DAW sample rate to 48,000 Hz. As mentioned earlier, Jamulus is currently hard-coded to that (and the author of Jamulus has indicated that there are no plans to change), and we want to avoid conflicts. It has nothing to do with one sample rate being better than another, just compatibility. If you set the DAW to 44,100 Hz, then you will sound sharp coming out of Jamulus, by about a semitone and a half. It's a cool effect, but causes no end of headaches.
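For the curious, the size of that pitch shift can be estimated with a little arithmetic. The sketch below assumes the simplest explanation: audio produced at 44,100 samples per second is played back, unconverted, at 48,000 samples per second, so every frequency is multiplied by 48,000 / 44,100. The function name is made up for this example.

```python
import math


def pitch_shift_semitones(recorded_rate_hz, playback_rate_hz):
    """Pitch change, in equal-tempered semitones, when audio made at one rate
    is played back at another without sample rate conversion."""
    return 12 * math.log2(playback_rate_hz / recorded_rate_hz)


shift = pitch_shift_semitones(44_100, 48_000)
print(f"{shift:.2f} semitones")  # 1.47 semitones
```

Under that assumption, the mismatch raises everything by roughly one and a half semitones, and shortens it by the same ratio.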

Then, configure the correct input and output devices for both the DAW and Jamulus. This will vary depending on what you’re trying to do. If you want to pre-process your microphone input before sending to Jamulus:

- DAW input: the audio interface (your microphone)
- DAW output: the virtual audio driver
- Jamulus input: the virtual audio driver
- Jamulus output: the audio interface (your headphones)

If you want to post-process the Jamulus output before listening:

- Jamulus input: the audio interface (your microphone)
- Jamulus output: the virtual audio driver
- DAW input: the virtual audio driver
- DAW output: the audio interface (your headphones)

If you want to do both pre-processing and post-processing, you need to set up an aggregate device as described earlier. Then, set both Jamulus and the DAW to use the aggregate device for both input and output. Then, carefully select the right channels for everything. In the case of my BlackHole/Quartet aggregate device shown in the picture above, I would:

- Set the Jamulus input to channels 1 and 2 (the first BlackHole stereo pair).
- Set the Jamulus output to channels 3 and 4 (the second BlackHole stereo pair).
- In the DAW, take the microphone from Quartet input 1 and send the processed signal to BlackHole channels 1 and 2.
- In the DAW, take the Jamulus mix from BlackHole channels 3 and 4 and send it to Quartet outputs 1 and 2 (the headphones).

A Full Setup

The sections below describe how to configure the full DAW setup, using the same DAW session to both pre-process and post-process the Jamulus audio.

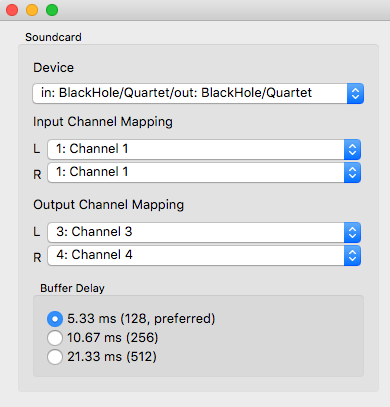

Jamulus

The screenshot below shows the Jamulus setup window. We have created an aggregate BlackHole/Quartet device (described earlier in Aggregate Devices) and set it as both input and output for Jamulus. We could actually have used just the BlackHole device, but it is easier on the brain to use the same audio device for both Jamulus and the DAW, so things match up.

The input channels are set to channels 1 and 2, the first two BlackHole channels. We will need to configure the DAW to send our input signal to these channels.

The output channels are set to channels 3 and 4, the next BlackHole stereo pair. The DAW will need to take input from these channels and send them to the headphones.

That’s all we need to do for Jamulus.

DAW Setup

This section shows how to set up Waveform Free specifically. If you are using a different DAW, it may be of less use, but will still show the kinds of things you need to do on other DAWs as well.

First, go to the Settings area using the bar at the top of the main window, and select the Audio Devices tab. The Input and Output dropdowns let you select the audio devices to be used for input and output. Select the devices appropriate for your use case, as described above.

In the example shown below, I have selected the aggregate BlackHole/Quartet device that I configured earlier using the Audio MIDI Setup application (see Aggregate Devices above). The sample rate has been set to 48,000 Hz, which is needed to match Jamulus. The audio buffer size is left at its default of 512 samples.

On the right, you see the complete list of channels in our chosen audio input and output devices, meaning all 24 outs and 28 ins of the aggregate device. Enabling any of the input and output channels makes them available in the track dropdowns. Disabled channels effectively don’t exist, so you can avoid an overload of dropdown options. When you click on a specific channel, an option panel shows up at the bottom of the window, letting you choose whether to treat the channel as a stereo pair with its upper neighbor.

In the screenshot, four stereo output pairs are enabled: the first three pairs of BlackHole (1+2, 3+4 and 5+6), and outputs 1 and 2 of the Quartet (which are routed to the headphones). Four inputs are enabled: the first three stereo pairs of BlackHole, and input 1 of the Quartet (a mono input from the microphone).

One other piece of setup is required. We may use Waveform’s recording capabilities, but mainly we just want the signal to flow through the system, from the microphone to Waveform, to Jamulus, back to Waveform and out to the headphones. So we must turn on the Live Input Monitoring setting for all input tracks. Select each input track in turn and make sure that the Live Input Monitoring button is turned on in the panel at the bottom (as shown below).
Normally, you might not want live monitoring, because it introduces latency, and you might hear a delayed version of yourself in the headphones. But for our use case, that’s not a problem.
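To get a feel for how much delay one buffer represents, the arithmetic is simple enough to sketch in Python. This only counts the time needed to fill a single buffer at the settings mentioned above (512 samples at 48,000 Hz). The real round trip will be longer, since the signal passes through the DAW twice and through Jamulus and the network in between. The function name is made up for this example.

```python
def buffer_latency_ms(buffer_size_samples, sample_rate_hz):
    """Time, in milliseconds, that it takes to fill one audio buffer."""
    return 1000 * buffer_size_samples / sample_rate_hz


print(f"{buffer_latency_ms(512, 48_000):.1f} ms")  # 10.7 ms
```

Halving the buffer size halves this figure, at the cost of making the computer work harder to keep up.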

Now we are ready to set up our “song”, the tracks that we will use to communicate with Jamulus. We need two tracks:

- One track that takes the microphone and sends it to Jamulus.
- One track that takes the Jamulus output and sends it to the headphones.

Create a song (Waveform calls them Edits) with two tracks. Click on the source selectors at the left of each track to choose their source. The input channels you enabled in the Audio Device setup page above will appear. In the example above, they are Input 1 + 2, Input 3 + 4, Input 5 + 6 and Analog 1. Select Analog 1 (the microphone) for the first track, and Input 3 + 4 (the second BlackHole stereo pair) for the second track. Arm the record function for both tracks by clicking on the red dots to the right of the source selectors, so that signal will flow through to the tracks.

Now set the track outputs by clicking on the output selectors at the very right of each track. Just as for the inputs, you will see the channels you enabled during Audio Device setup. Select Output 1 + 2 (the first BlackHole stereo pair) as the output of the first track, and Playback 1 + 2 (the headphones) as the output of the second.

You should now see something almost like the screenshot below. Waveform has many window configuration modes, so you may see other panels, and other ways of setting up what we just did, but the tracks should look vaguely like the below.

So far, the DAW is acting as a very complicated conduit between our microphone and headphones on one side and Jamulus on the other. Let's do something with the sound.

First, we want to add compression to the first track. In the screenshot above, notice that I have added the AUDynamicsProcessor plugin to the first track. That plugin performs compression and noise gating. Specific compression plugins are beyond the scope of this document, and I don’t even know if this is the best one. It’s just the one I was familiar with from GarageBand. It is handy because clicking on it brings up a little graphical window that helps you set how much compression and gating to add. Feel free to use whatever compression plugin you want.

Second, we want reverb on the second track. I accomplished this by adding the Redline Reverb plugin. Again, there are many ways to add reverb.

The Moment of Truth

Now we have:

- Jamulus taking its input from BlackHole channels 1 and 2, and sending its output to BlackHole channels 3 and 4.
- A first DAW track that compresses the microphone and sends it to Jamulus.
- A second DAW track that adds reverb to the Jamulus output and sends it to the headphones.

Cross your fingers, put on the headphones, and whistle Beethoven’s 9th into the microphone. With any luck you should hear a compressed, delayed, reverbed version of yourself in the headphones. The input and output meters in the DAW and in Jamulus’ main window should all be dancing. Congratulations!

If it isn’t working, try to see if you can trace how far the signal is getting. Can you see it in the first DAW track? Then, can you see it in Jamulus? Then, can you see it in the second DAW track? Good luck!

Finally, let’s try to record ourselves. Press the record button in the DAW and sing something. Then press stop, rewind to the start, and press play. You should hear yourself in your full glory. Congratulations again!

There are a couple of caveats. First, even though you should hear the reverb, it’s not because it was captured on the recording. I think the plugins actually affect the sound post-recording. So the recorded sound is dry. The reason you hear reverb is that it is being applied to the sound as it’s played back. This should not be a problem, but if it is, you can probably rejigger the DAW signal path to apply reverb to the signal before recording.

Second, note that both tracks recorded something, because both tracks were armed for recording. So you actually recorded both the dry unprocessed microphone input and the final Jamulus output. Other than wasting space, it should not be harmful.

Also, be aware that, if you were to try to play back the lovely recording to your peers in the Jamulus session, you would hear the final mix, but your peers would only hear you, because that is what’s being routed to them through Jamulus. To allow them to hear the final mix, you will need to reroute the second track to Output 1 + 2 (which is Jamulus’ input). This could cause audio feedback, so you must also disarm the second track for recording.

In general, you will need to think through a different set of track outputs and inputs in order to broadcast the recording to Jamulus.
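One way to think a routing through before touching any settings is to write it down as a table and follow the signal hop by hop. The Python sketch below does that for the full setup described in this section. It is only a thinking aid: the node names are informal labels chosen for this example, each source is assumed to have exactly one destination, and nothing here talks to Waveform, Jamulus or BlackHole.

```python
# Each entry reads "source: destination", following the full setup in the text.
ROUTES = {
    "microphone (Analog 1)": "DAW track 1",
    "DAW track 1": "BlackHole 1 + 2",
    "BlackHole 1 + 2": "Jamulus input",
    "Jamulus input": "Jamulus server mix",
    "Jamulus server mix": "Jamulus output",
    "Jamulus output": "BlackHole 3 + 4",
    "BlackHole 3 + 4": "DAW track 2",
    "DAW track 2": "headphones (Playback 1 + 2)",
}


def trace(routes, start):
    """Follow the signal from `start` until it has nowhere left to go.
    Raises RuntimeError if the signal would come back around (feedback)."""
    path = [start]
    seen = {start}
    while path[-1] in routes:
        nxt = routes[path[-1]]
        path.append(nxt)
        if nxt in seen:
            raise RuntimeError("feedback loop: " + " -> ".join(path))
        seen.add(nxt)
    return path


# Normal operation: microphone in, headphones out.
print(" -> ".join(trace(ROUTES, "microphone (Analog 1)")))

# Broadcasting a recording: track 2 now feeds Jamulus' input instead.
rerouted = dict(ROUTES)
rerouted["DAW track 2"] = "BlackHole 1 + 2"
try:
    trace(rerouted, "DAW track 2")
except RuntimeError as err:
    print(err)

# Disarming track 2 stops it from passing the live Jamulus output through.
del rerouted["BlackHole 3 + 4"]
print(" -> ".join(trace(rerouted, "DAW track 2")))
```

The second trace fails with a feedback loop, which is the problem described above. The third, with the live input of track 2 removed, ends harmlessly at BlackHole 3 + 4.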
